Around June 25, the Publish team changed the Pro upgrade paths in New Publish. Instead of upgrading users directly to a Pro plan, the upgrade paths now prompt them to start a trial.

Based on the data in this analysis, we estimate the effect of this change to be a 97% increase in Pro trial starts and a 16% decrease in new MRR for the monthly Pro plan. Both of the estimated effects are statistically significant.

Data Collection

Let’s collect all of the Pro subscriptions created this year from BigQuery.

# connect to BigQuery
con <- dbConnect(
  bigrquery::bigquery(),
  project = "buffer-data",
  dataset = "dbt_buffer"
)

# define sql query
sql <- "
  select
    s.id
    , date(s.created_at) as created_date
    , s.customer_id
    , s.plan_id
    , count(distinct i.id) as paid_invoices
  from stripe_subscriptions s
  inner join stripe_invoices i
    on s.id = i.subscription_id
    and i.paid
    and i.amount_due > 0
  where date(s.created_at) >= '2019-01-01'
    and s.plan_id in ('pro_v1_monthly', 'pro_v1_yearly')
  group by 1,2,3,4
"

# collect data
subs <- dbGetQuery(con, sql)

There are around 20K subscriptions in this dataset. We’ll also want to collect trials data to measure the effect on trials.

# define sql query
trials_sql <- "
  select distinct
    t.id
    , date(t.trial_start_at) as trial_start_at
    , t.plan_id
    , t.customer_id
    , t.subscription_id
  from stripe_trials t
  where date(t.trial_start_at) >= '2019-01-01'
    and t.plan_id in ('pro_v1_monthly', 'pro_v1_yearly')
"

# collect data
trials <- dbGetQuery(con, trials_sql)

We should also collect MRR data for the Pro plans.

# collect pro MRR data
mrr <- get_mrr_metrics(metric = "mrr",
                       start_date = "2019-01-01",
                       end_date = "2019-07-23",
                       interval = "day",
                       plans = "Pro8 v1 - Monthly")

Effect on Trial Starts

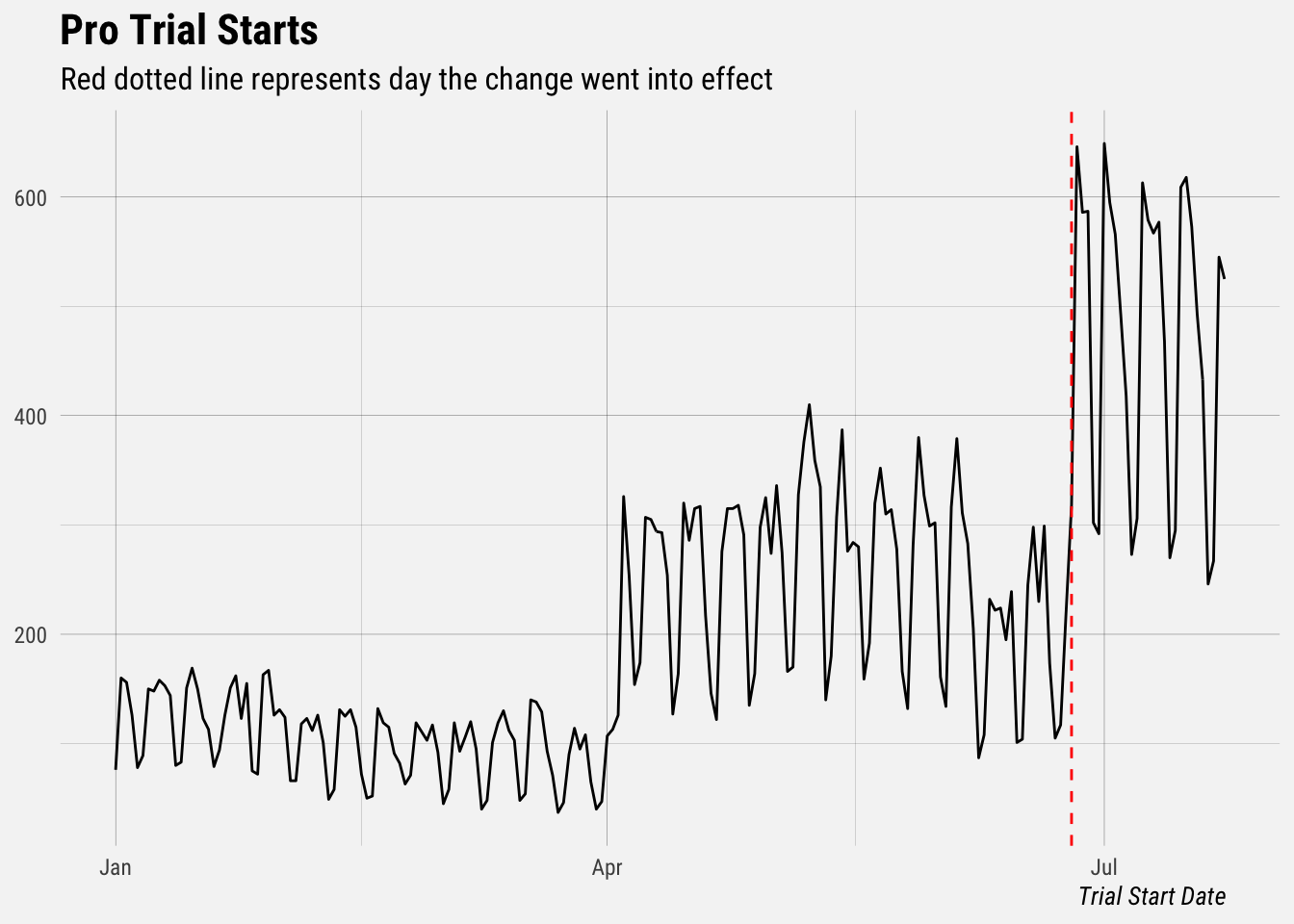

Let’s plot Pro trial starts over time.

We can see that there was a large increase in Pro trial starts on the date this change went into effect. Interestingly, there was also a large increase in Pro trial starts at the beginning of April. What happened there? Was that the date that we directed all signups to the pricing page?

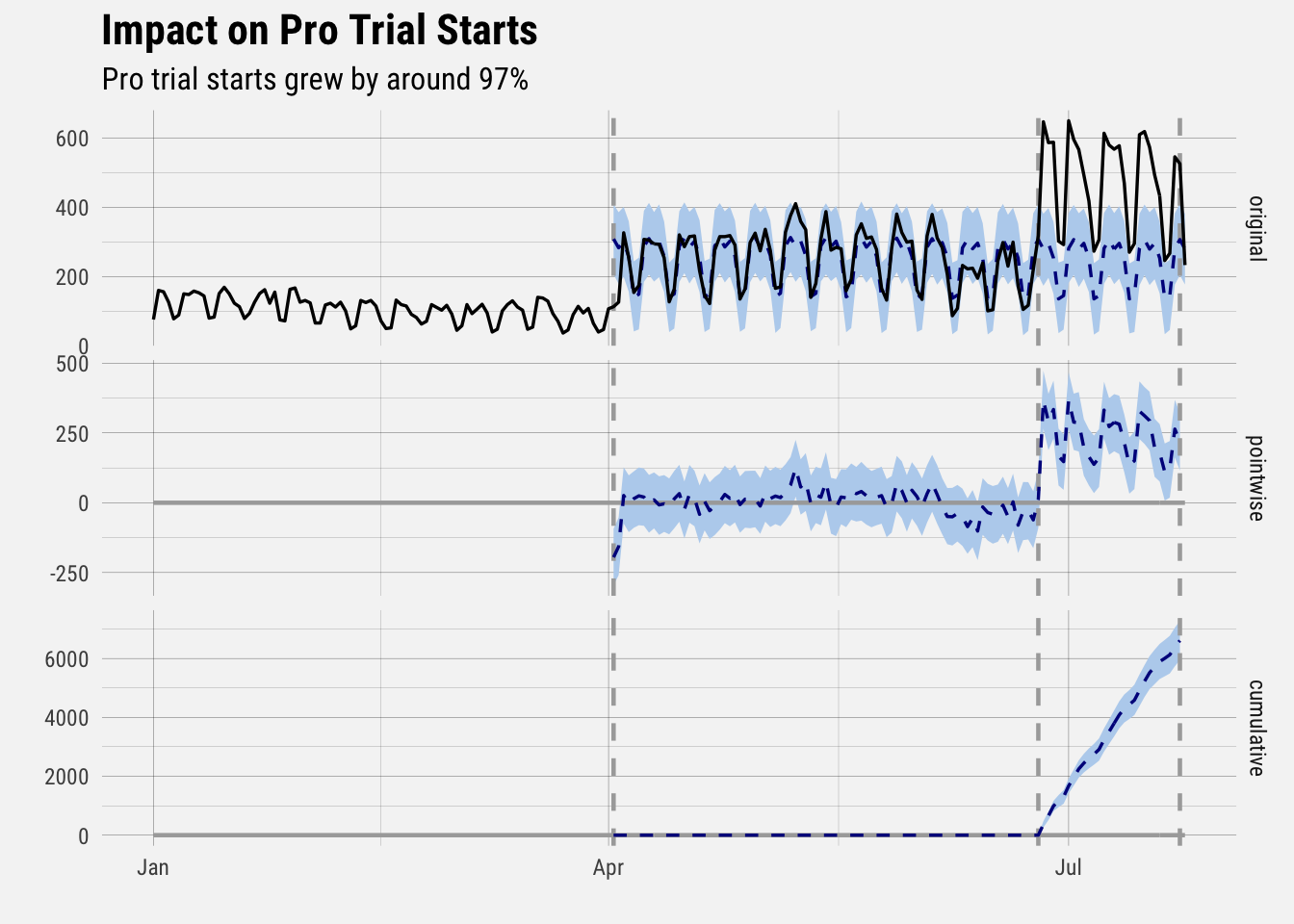

Let’s run a causal impact analysis to calculate the effect on trial starts.
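
The CausalImpact call that follows takes a time series, `trials_ts`, and two date ranges, `pre.period` and `post.period`, whose construction is not shown in this post. The sketch below is one way they might be built, not necessarily how it was done here. It uses a tiny made-up `trials` data frame in place of the real query result, and the period boundaries are assumptions: only the June 25 change date comes from the text, and the real pre-period would start after the early-April change rather than at the first observed day.

```r
# Toy stand-in for the `trials` data frame (made-up rows, not real data)
trials <- data.frame(
  id = c("t1", "t2", "t3", "t4", "t5"),
  trial_start_at = as.Date(c("2019-06-23", "2019-06-23", "2019-06-24",
                             "2019-06-26", "2019-06-26"))
)

# Count trial starts per day
counts <- aggregate(id ~ trial_start_at, data = trials, FUN = length)
names(counts)[2] <- "n"

# Add days with no trial starts so the daily series has no gaps
all_days <- data.frame(
  trial_start_at = seq(min(trials$trial_start_at),
                       max(trials$trial_start_at), by = "day")
)
daily <- merge(all_days, counts, by = "trial_start_at", all.x = TRUE)
daily$n[is.na(daily$n)] <- 0
daily <- daily[order(daily$trial_start_at), ]

# Period boundaries (assumed; only the change date is taken from the text)
change_date <- as.Date("2019-06-25")
pre.period  <- c(min(daily$trial_start_at), change_date - 1)
post.period <- c(change_date, max(daily$trial_start_at))

# Convert to a date-indexed series if the zoo package is available
if (requireNamespace("zoo", quietly = TRUE)) {
  trials_ts <- zoo::zoo(daily$n, order.by = daily$trial_start_at)
}
```

Filling in zero-count days matters because a weekly seasonal component (`nseasons = 7`) assumes one observation per day.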

# run analysis
impact <- CausalImpact(trials_ts, pre.period, post.period,
                       model.args = list(niter = 5000, nseasons = 7))

# plot impact
plot(impact) +
  labs(title = "Impact on Pro Trial Starts",
       subtitle = "Pro trial starts grew by around 97%") +
  buffer_theme()

Between the change at the beginning of April and the changes we made on June 25, the average number of Pro trial starts was 242. After the June 25 change went into effect, the average grew to 478, a relative increase of around 97%.

The probability of obtaining this effect by chance is very small (Bayesian one-sided tail-area probability p = 0). This means the positive effect observed during the intervention period can be considered statistically significant and is unlikely to be due to random fluctuations.
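
As a quick check on the relative increase, the two averages quoted above can be plugged into the usual relative-change formula. Both inputs are rounded, so the result only approximately matches the model's reported figure:

$$
\frac{478 - 242}{242} = \frac{236}{242} \approx 0.975
$$

That is roughly in line with the reported increase of around 97%.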

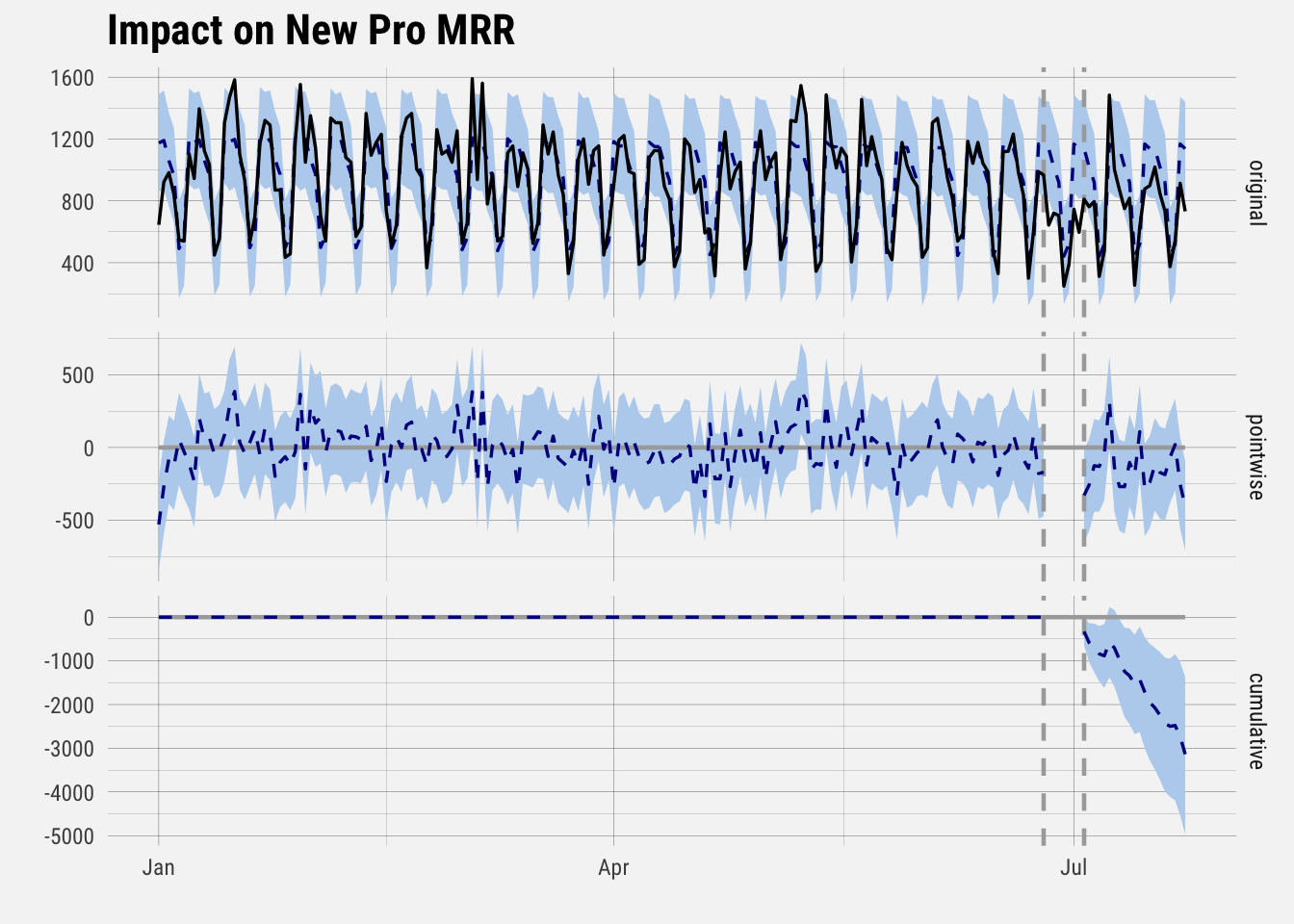

Now let’s look at what we really care about, i.e. Pro MRR.

Effect on Pro MRR

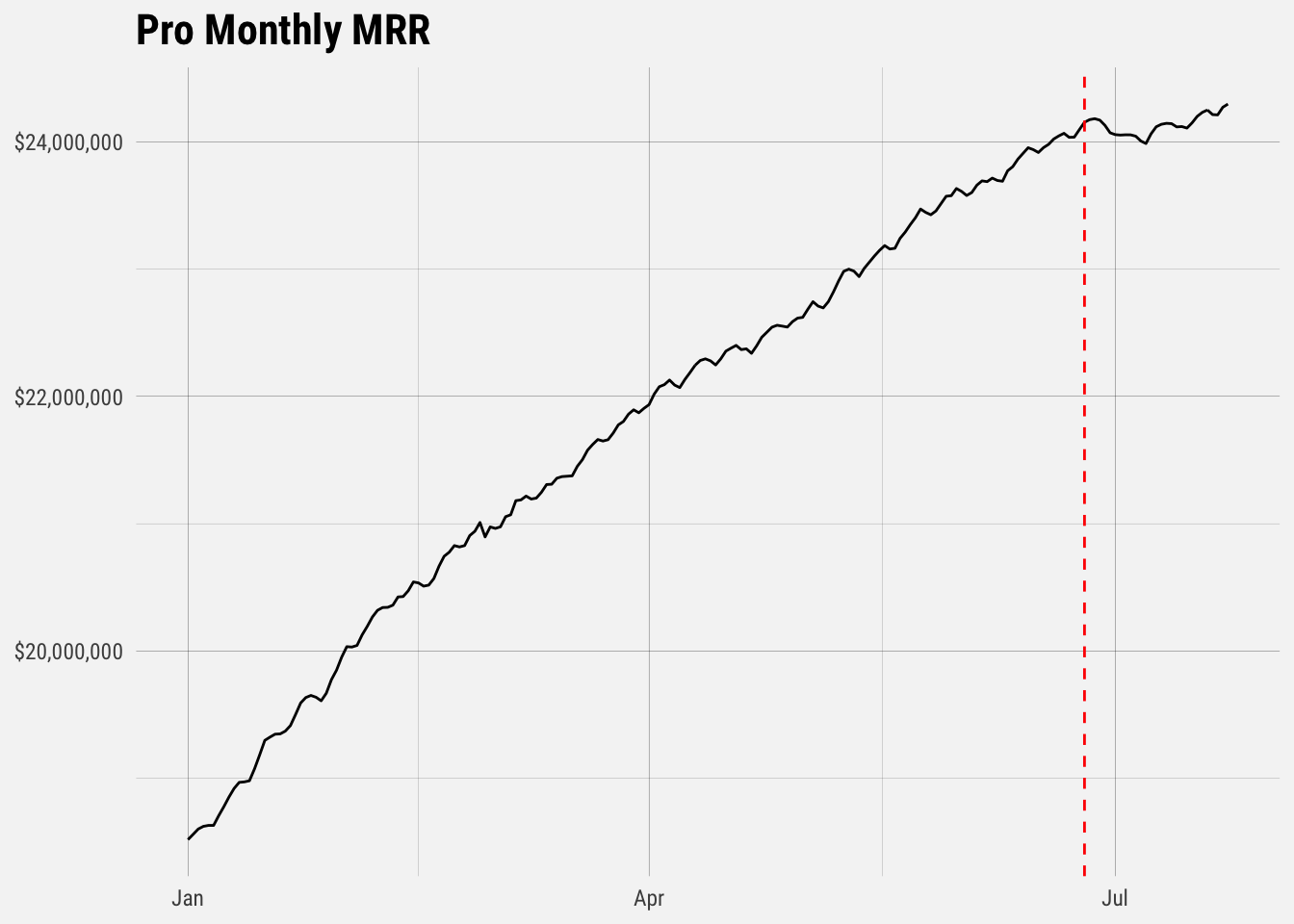

Let’s plot Pro MRR and daily Pro MRR growth.

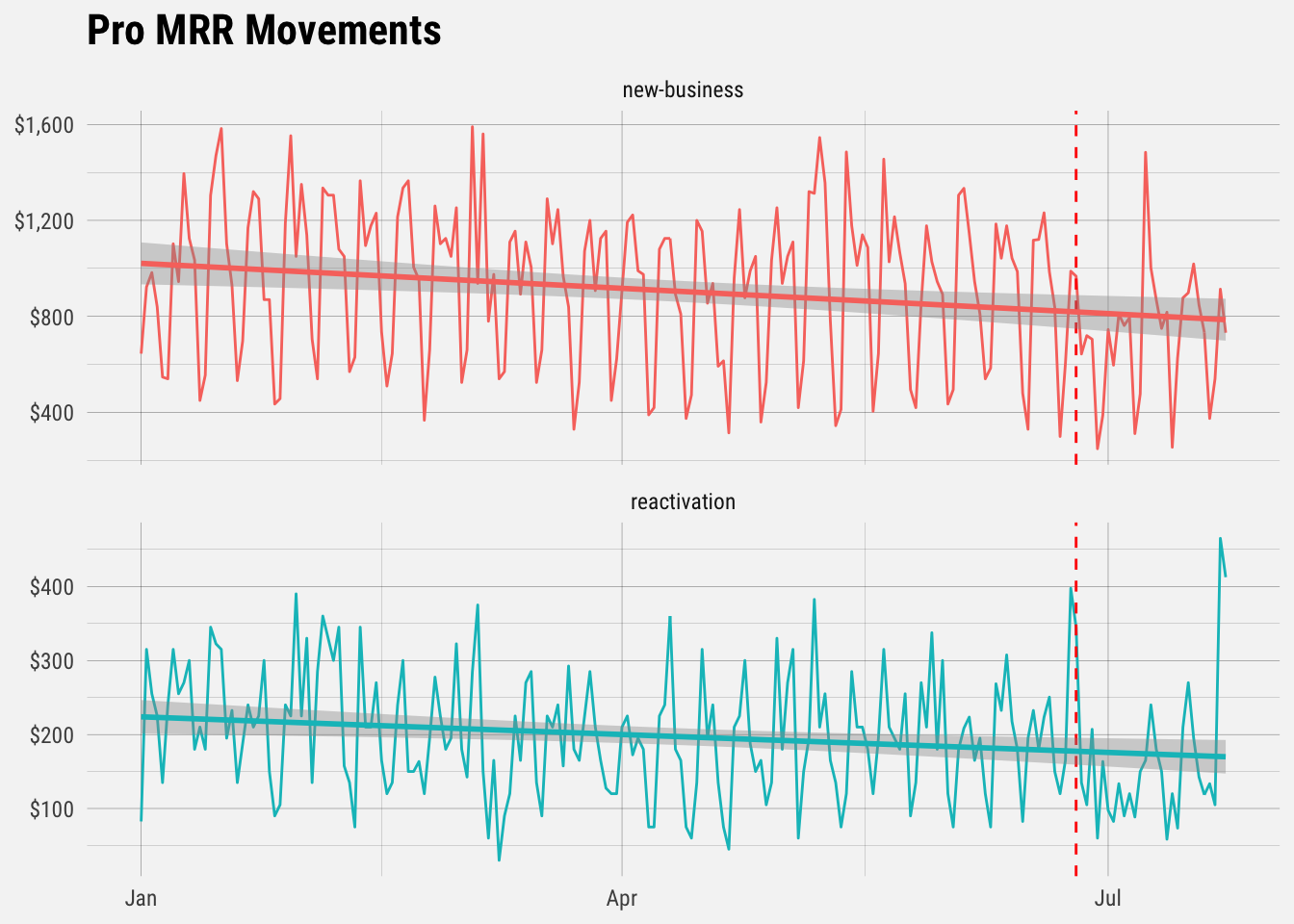

It looks like there is evidence that the changes we made had a negative effect on new and reactivation MRR for the Pro monthly plan. We would expect a decrease in MRR growth for at least 7 days after the change went into effect, as we were replacing what would be direct upgrades with trials that take 7 days. After 7 days, we might expect new MRR to recover. Let’s run a similar causal impact analysis to estimate the effect that this had on new Pro MRR.

# run analysis
impact <- CausalImpact(mrr_ts, pre.period, post.period,
                       model.args = list(niter = 5000, nseasons = 7))

# plot impact
plot(impact) +
  labs(title = "Impact on New Pro MRR",
       subtitle = NULL) +
  buffer_theme()

After 7 days had passed since the change went into effect, new Pro MRR was around 757 per day. Before the change went into effect, new Pro MRR was around 906 per day. This represents a relative decrease of 16% in new Pro MRR. The probability of obtaining this effect by chance is very small (Bayesian one-sided tail-area probability p = 0). This means the causal effect can be considered statistically significant.
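
The 16% figure follows from the two daily averages quoted above (both rounded):

$$
\frac{757 - 906}{906} = \frac{-149}{906} \approx -0.164
$$

In other words, new Pro MRR per day fell by roughly 16% relative to the earlier level.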

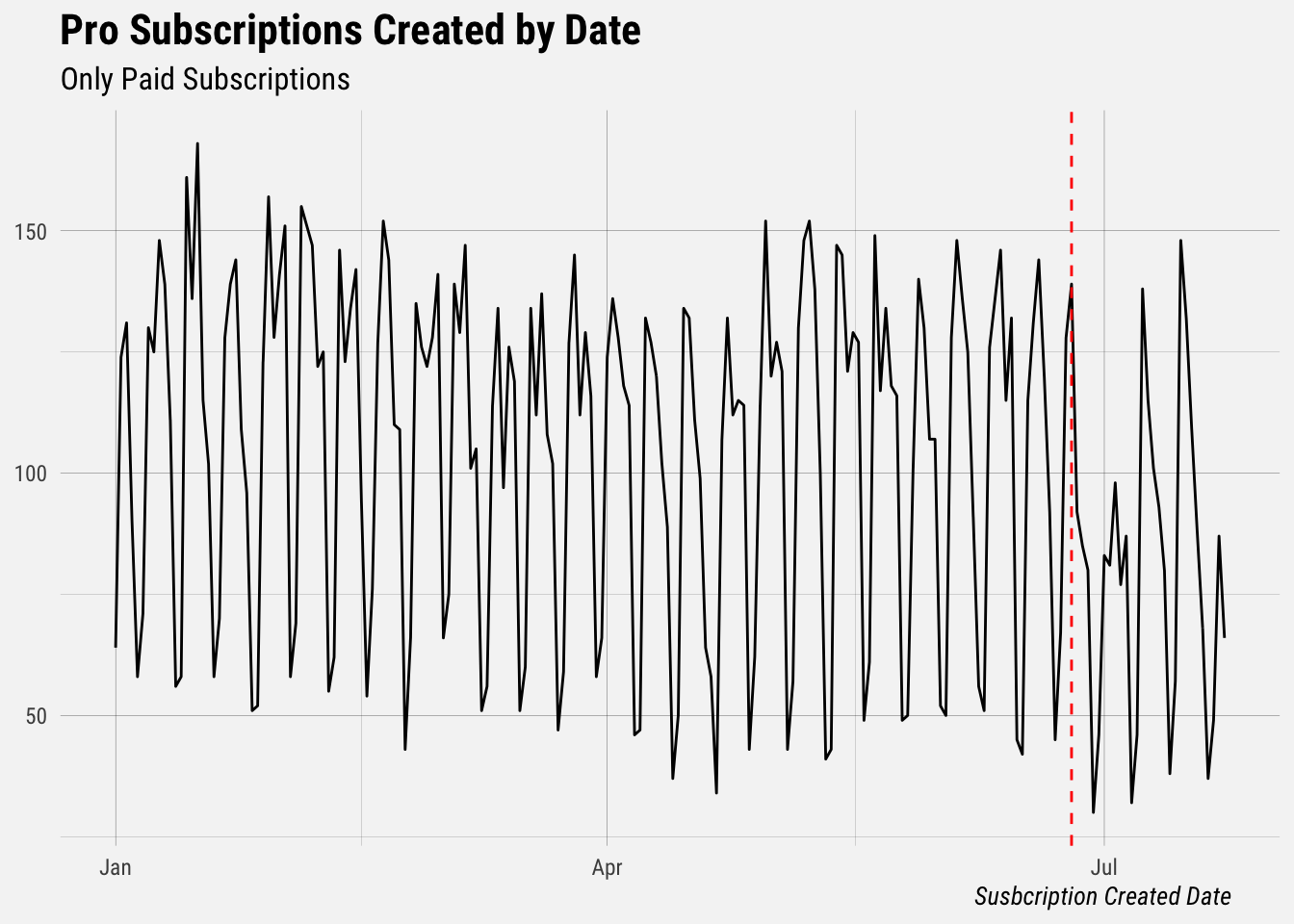

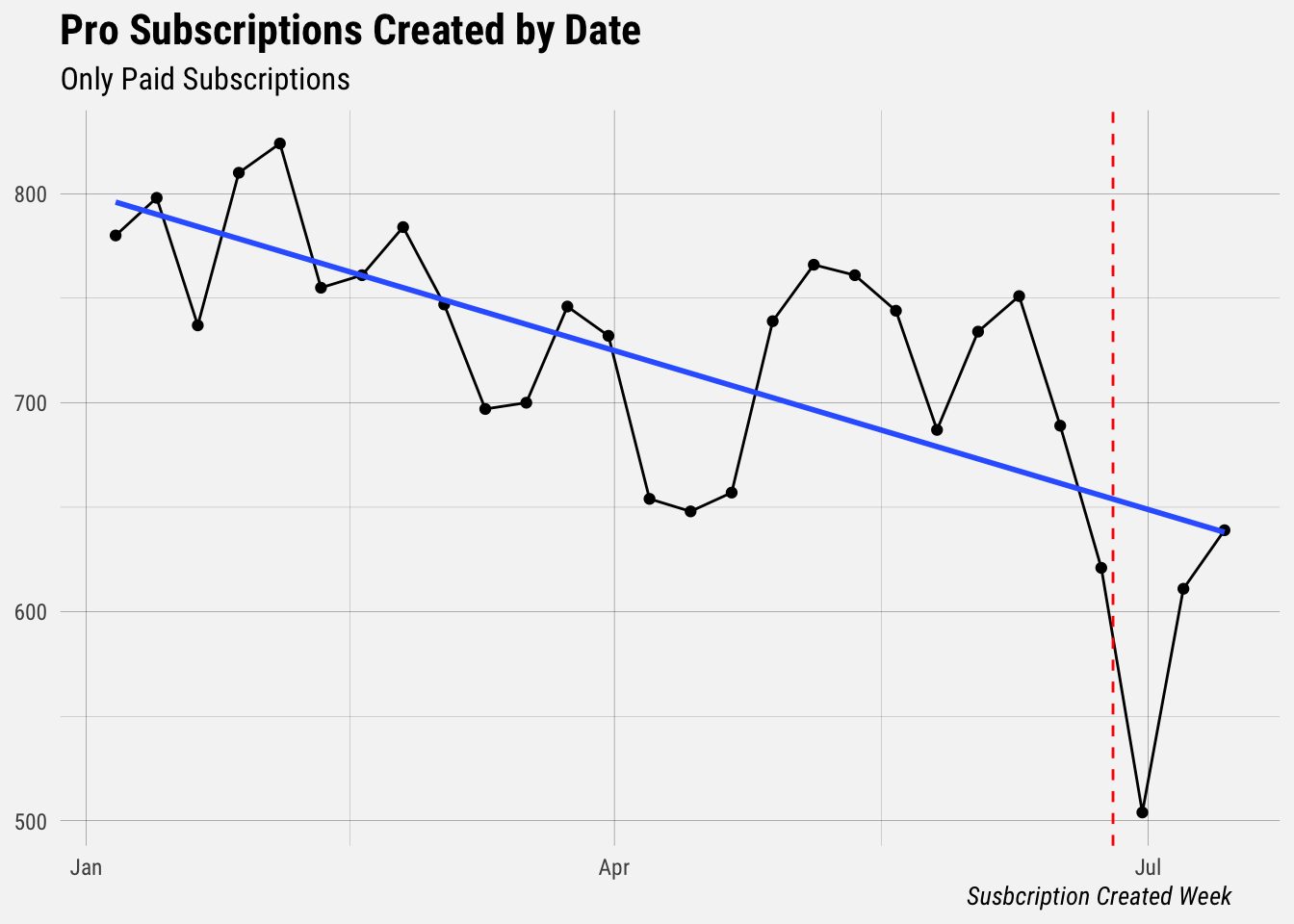

Effect on Subscription Counts

Let’s plot subscription counts over time.

We can see that the data is highly cyclical – the number of Pro subscriptions created on a given date is dependent on the day of the week. There is a noticeable decrease in Pro upgrades once we changed the upgrade paths, which makes sense. We would expect a delay of at least 7 days before we see the number of Pro upgrades recover to previous levels. Let’s view the weekly subscription numbers.

We can see that there is a general negative trend, which the blue regression line makes clear. There also still appears to be some seasonality: the week of the month might influence how many subscriptions are created. It should be noted that the subscriptions created earliest have had the most time to finish trials and convert, so we might expect a negative trend like this.
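
The weekly view and trend line described above can be approximated in base R. This is only a sketch: the `subs` rows are made up for illustration, the column names `week`, `subscriptions`, and `week_index` are introduced here, and a plain `lm()` fit stands in for whatever produced the regression line in the original chart.

```r
# Toy stand-in for the `subs` data frame (made-up rows, not real data)
subs <- data.frame(
  id = paste0("sub_", 1:8),
  created_date = as.Date(c("2019-06-03", "2019-06-04", "2019-06-05",
                           "2019-06-10", "2019-06-12",
                           "2019-06-17", "2019-06-18",
                           "2019-06-24"))
)

# Label each subscription with the Monday that starts its week
subs$week <- as.Date(cut(subs$created_date, breaks = "week"))

# Count subscriptions created per week
weekly <- aggregate(id ~ week, data = subs, FUN = length)
names(weekly)[2] <- "subscriptions"

# Fit a straight line through the weekly counts
weekly$week_index <- seq_len(nrow(weekly))
fit <- lm(subscriptions ~ week_index, data = weekly)
slope <- coef(fit)[["week_index"]]  # negative slope = downward trend
```

Aggregating by week removes the day-of-week cycle, which makes the underlying trend easier to see. The most recent weeks are still biased downward, since trials started in them may not have converted yet.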